This post goes through the deployment of an HA pair of F5 BIG-IP LTMs in Azure. As with most vendors, the F5 solution is documented as a set of ARM templates. I personally prefer to pick these things apart and first build them manually to better understand what is going on under the hood. A more cynical person may suggest vendors do this on purpose to try and hide all the fudges needed to make their solution work in a public cloud.

My initial idea in regards to high availability was to use BGP (configured in F5 kernel) to advertise the VIPs (more specific /32 routes) into Azure via an Azure Route Server. However, like with anything in Azure there are always constraints to throw a spanner in the works:

- System routes for traffic related to virtual network, virtual network peerings, or virtual network service endpoints, are preferred routes, even if BGP routes are more specific. Therefore, my /32 VIP addresses would have to be an alien range, not part of any Azure virtual network (VNET)

- Alien ranges advertised to the Azure Route Server will not be advertised anywhere else; it will ONLY advertise the address space of the VNET in which it resides along with any VNETs peered to it. Therefore, when you have multiple spokes and remote sites, the fudges you have to implement to make it work mean you lose the simplicity you were trying to gain with this BGP method

After discounting BGP I was left with the following LTM HA failover solutions:

- API HA (Active/Standby): Uses Azure API calls to failover between devices by moving LTM IPs and Azure route table next-hops

- AZ LB (Active/Active): The LTM VIP is also an Azure standard load balancer VIP forwarding traffic onto the LTM (in backend pool) with a destination of 0.0.0.0:port (all LTM VIPs are 0.0.0.0). Uses SNAT to ensure that the return traffic gets back to the LTM that load balanced it

- AZ LB with floating IP, aka Direct Server Return (Active/Active): The same as option 2, but rather than using 0.0.0.0 it forwards traffic onto the LTMs using the true destination address of the LTM VIP (all LTM VIPs have a unique address)

For me option 1 is a non-starter as the Azure API is sooooo slow; from past experience with Palos it can take over 2 minutes for the API calls to fail over. It wouldn’t be as bad if that time was consistent, but the API is unpredictable and the time varies on each API call (it is almost random). It seems illogical to have to put a LB before a LB, but if you need the F5 features you have no real choice. Option 3 is my preferred option for the following reasons:

- VIPs use the same address on the AZ LB and the LTM, so it is less confusing

- As LTM VIPs aren’t 0.0.0.0 (each has a unique IP), multiple different types of VIPs can be hosted on the one pair of LTMs

Traffic Flow and Operation

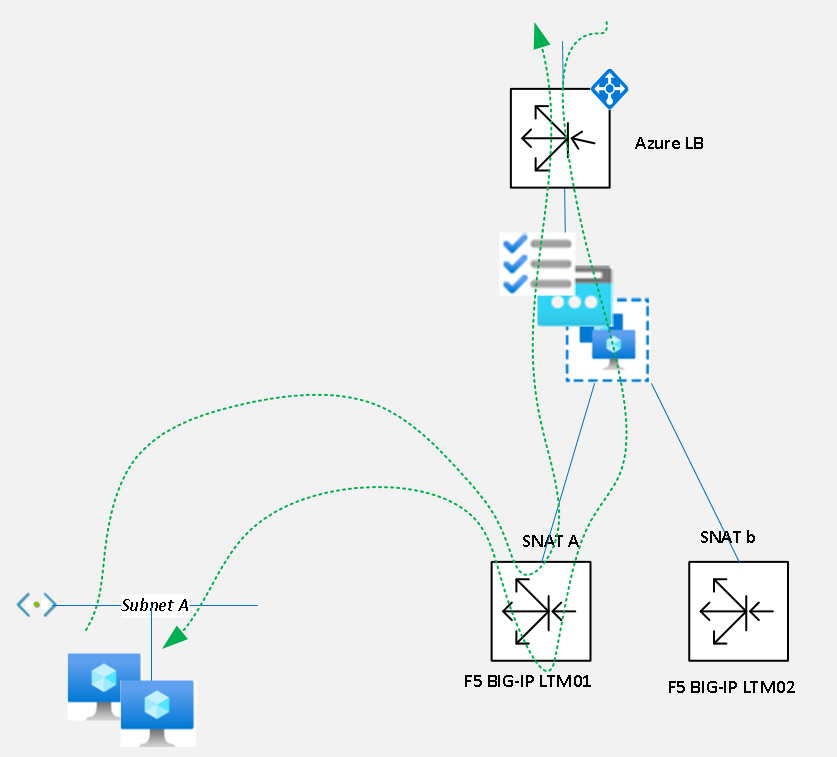

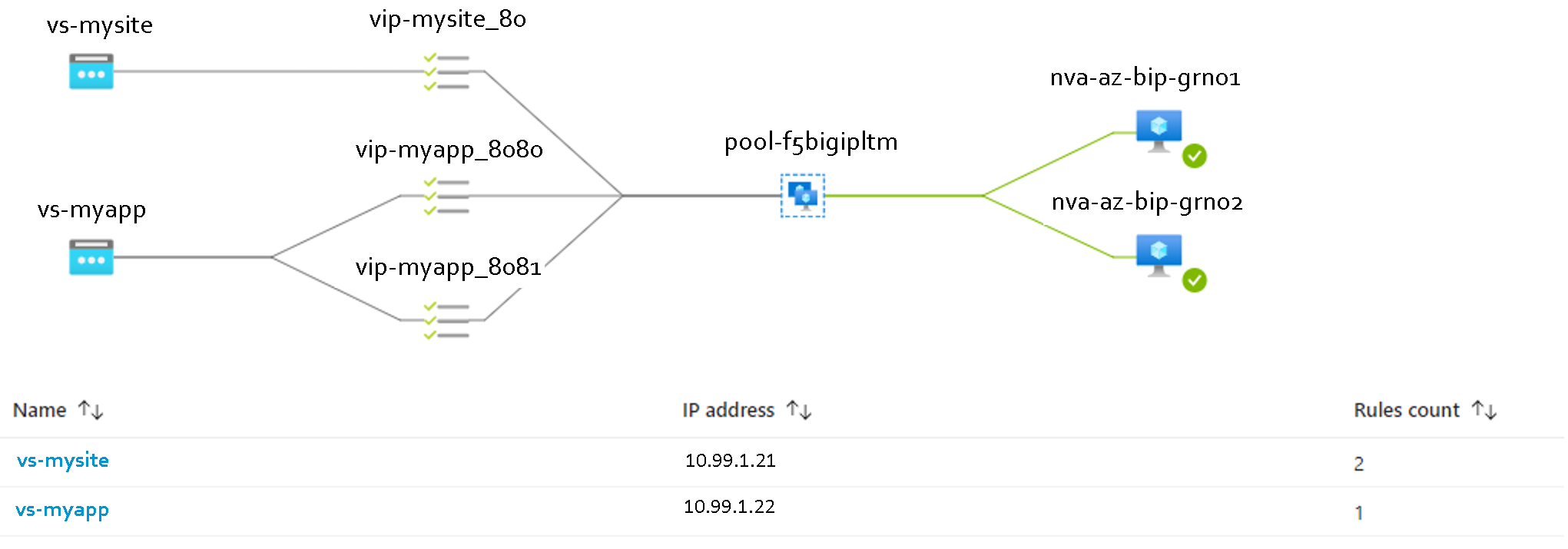

The traffic flow for connections to a VIP is as follows:

- Traffic comes into the Azure load balancer and based on the Azure load balancing rule is forwarded onto the backend pool of F5 LTMs on the backend port specified in the rule. New flows are shared between any LTMs that are deemed as healthy (passing the health probe), existing flows go back to the same LTM due to the Azure load balancer client IP session persistence

- The LTM load balances the traffic based on its load balancing algorithm forwarding it on to the true backend node whilst NATing the source address to the local F5 SNAT for that VIP. SNATs are specific to each F5 as they are a secondary IP on the F5 NIC

- The return traffic goes back via the same LTM as the source of the traffic is the secondary IP (SNAT) of a specific F5 device

Some important concepts and configurations in the setup and operation:

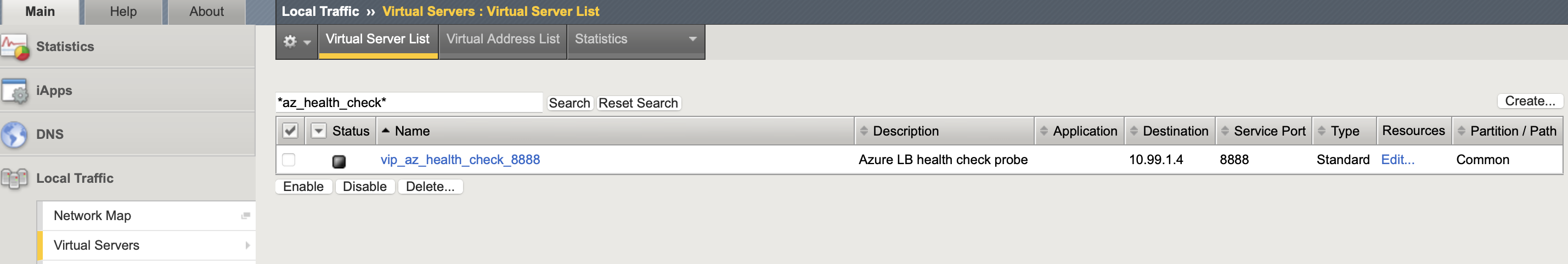

- Active/Active Traffic: All Azure load balancer VIPs always use the same health probe and pool of backend addresses, the F5s themselves. Each LTM has a dummy virtual server called vip_az_health_check_8888; this is the VIP you would disable to take one of the F5s out of service, as it causes that F5 to fail the health probe and so be removed from the backend pool of all the Azure LB VIPs

- Active/Standby Configuration: The Active/Standby HA state on the actual LTMs is purely for the synchronisation of configuration. Failing over the HA state on the LTMs themselves will have no effect on the processing of traffic as the virtual address doesn’t use a floating traffic-group (the LTM's normal mechanism, with GARP, for directing traffic to the active LTM)

- Traffic-group none – Virtual address: The LTM virtual address has to be set as traffic-group none as without doing this the LTM will only process traffic for the VIP on the device that has the floating traffic-group, so the active device

- Traffic-group-local-only – SNAT address: The SNAT address has to be set as traffic-group-local-only so that it is not synchronised between the LTM HA pair; this allows for a different address on each F5. It is a requirement because the LTMs are in one-arm mode; without it the return traffic from the backend node would not come back through the correct LTM

This is the lab setup that the following sections will go about creating.

Building the Azure Infrastructure

The below steps use Azure CLI to build the F5 BIGIP Network Virtual Appliances (NVAs) and Azure networking infrastructure that they will sit on.

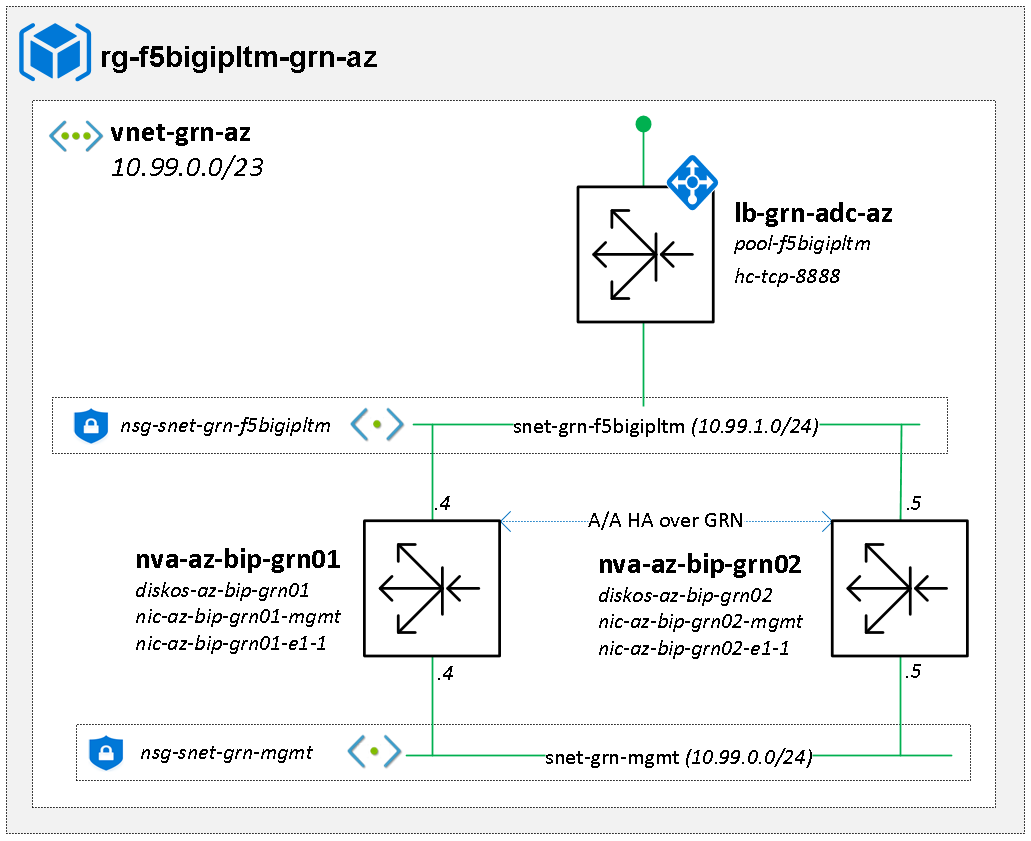

Azure Infrastructure (Resource group, NSG, VNET, subnet): There will be 2 subnets used by the F5s, one for management and the other for the load balanced traffic from which the SNAT and VIP addresses will come.

az group create --name rg-f5bigipltm-grn-az -l uksouth

az network nsg create -g rg-f5bigipltm-grn-az -n nsg-snet-grn-mgmt -l uksouth

az network nsg create -g rg-f5bigipltm-grn-az -n nsg-snet-grn-f5bigipltm -l uksouth

az network vnet create -g rg-f5bigipltm-grn-az -n vnet-grn-az --address-prefix 10.99.0.0/23 -l uksouth

az network vnet subnet create -g rg-f5bigipltm-grn-az --vnet-name vnet-grn-az --name snet-grn-mgmt --address-prefix 10.99.0.0/24 --network-security-group nsg-snet-grn-mgmt

az network vnet subnet create -g rg-f5bigipltm-grn-az --vnet-name vnet-grn-az --name snet-grn-f5bigipltm --address-prefix 10.99.1.0/24 --network-security-group nsg-snet-grn-f5bigipltm

Azure Load balancer (lb, backend pool, health probe): The base configuration used by all VIPs; the backend pool will hold all the F5s, with a TCP service probe on 8888 (could use HTTP and/or a different port). As this is a new Azure LB it can't be created without a frontend-ip (VIP), so create a dummy one that can be deleted later.

az network lb create -g rg-f5bigipltm-grn-az --name lb-grn-adc-az --sku Standard --vnet-name vnet-grn-az --subnet snet-grn-f5bigipltm --frontend-ip-name vs-dummy --private-ip-address 10.99.1.100 --backend-pool-name pool-f5bigipltm -l uksouth

az network lb probe create -g rg-f5bigipltm-grn-az --lb-name lb-grn-adc-az -n hc-tcp-8888 --protocol tcp --port 8888 --interval 5 --threshold 2

F5 BIGIP NVAs (nic, vm): The vCPU and memory dictate the number of F5 modules (LTM, AVR, DNS, etc) the device can support, and the VM size has a direct relationship to the number of NICs and throughput. I am using the f5-big-all-2slot-byol image with a 30 day trial from myF5; alternatively you can use the f5-bigip-virtual-edition-25m-good-hourly Azure plan.

The NICs are first created with e1-1 added to the Azure LB backend pool (pool-f5bigipltm) before then being associated as part of the VM build.

az network nic create -g rg-f5bigipltm-grn-az -n nic-az-bip-grn01-mgmt -l uksouth --vnet-name vnet-grn-az --subnet snet-grn-mgmt --private-ip-address 10.99.0.4

az network nic create -g rg-f5bigipltm-grn-az -n nic-az-bip-grn01-e1-1 -l uksouth --vnet-name vnet-grn-az --subnet snet-grn-f5bigipltm --ip-forwarding true --private-ip-address 10.99.1.4 --lb-address-pools pool-f5bigipltm

az vm create -g rg-f5bigipltm-grn-az -n nva-az-bip-grn01 --size Standard_DS2_v2 --zone 1 --nics nic-az-bip-grn01-mgmt nic-az-bip-grn01-e1-1 --image "f5-networks:f5-big-ip-byol:f5-big-all-2slot-byol:16.1.303000" --authentication-type password --admin-username netadmin --admin-password N0c00linazure! --computer-name az-bip-grn01 --os-disk-name diskos-az-bip-grn01 -l uksouth

az network nic create -g rg-f5bigipltm-grn-az -n nic-az-bip-grn02-mgmt -l uksouth --vnet-name vnet-grn-az --subnet snet-grn-mgmt --private-ip-address 10.99.0.5

az network nic create -g rg-f5bigipltm-grn-az -n nic-az-bip-grn02-e1-1 -l uksouth --vnet-name vnet-grn-az --subnet snet-grn-f5bigipltm --ip-forwarding true --private-ip-address 10.99.1.5 --lb-address-pools pool-f5bigipltm

az vm create -g rg-f5bigipltm-grn-az -n nva-az-bip-grn02 --size Standard_DS2_v2 --zone 2 --nics nic-az-bip-grn02-mgmt nic-az-bip-grn02-e1-1 --image "f5-networks:f5-big-ip-byol:f5-big-all-2slot-byol:16.1.303000" --authentication-type password --admin-username netadmin --admin-password N0c00linazure! --computer-name az-bip-grn02 --os-disk-name diskos-az-bip-grn02 -l uksouth

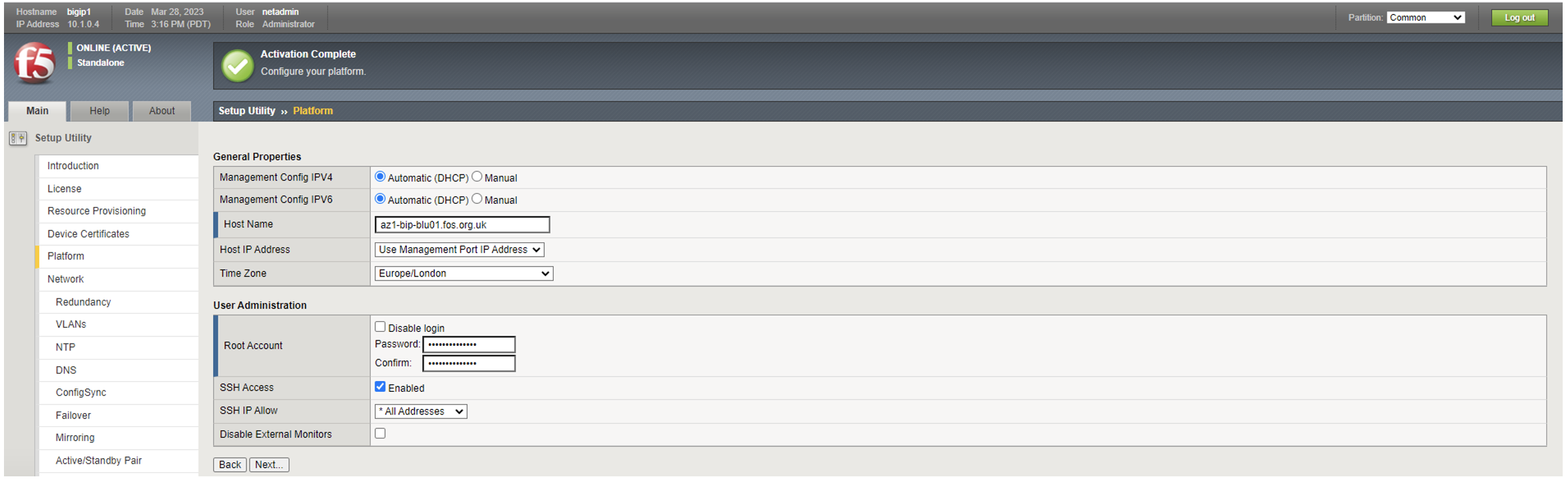

Base build of the F5 NVAs

The initial setup has to be done through the GUI: enabling LTM in resource provisioning, licensing, and setting Platform options such as the hostname and passwords. Skip Network settings as it is easier to do the rest of the device configuration from the CLI (enter TMOS using tmsh).

Interfaces: First thing to do is disable the functionality that enforces single NIC setup.

modify sys db provision.1nicautoconfig value disable

list sys db provision.1nicautoconfig

Add the VLAN, self-IP and default route for the data interface (e1-1) on each device.

create net vlan SNET-GRN-F5BIGIPLTM interfaces add { 1.1 }

create net self SNET-GRN-F5BIGIPLTM_SLF address 10.99.1.4/24 vlan SNET-GRN-F5BIGIPLTM traffic-group traffic-group-local-only allow-service default

create net route default gw 10.99.1.1

create net vlan SNET-GRN-F5BIGIPLTM interfaces add { 1.1 }

create net self SNET-GRN-F5BIGIPLTM_SLF address 10.99.1.5/24 vlan SNET-GRN-F5BIGIPLTM traffic-group traffic-group-local-only allow-service default

create net route default gw 10.99.1.1

High Availability: On each device configure the IP addresses used for configuration synchronizing between peers.

modify cm device az-bip-grn01.stesworld.com configsync-ip 10.99.1.4

modify cm device az-bip-grn01.stesworld.com unicast-address {{ ip 10.99.1.4 }}

modify cm device az-bip-grn01.stesworld.com mirror-ip 10.99.1.4

modify cm device az-bip-grn02.stesworld.com configsync-ip 10.99.1.5

modify cm device az-bip-grn02.stesworld.com unicast-address {{ ip 10.99.1.5 }}

modify cm device az-bip-grn02.stesworld.com mirror-ip 10.99.1.5

On one of the devices (don't need to do it on both) establish the device trust (enter details of the neighbor device), create the sync-failover device group and set the HA order with auto-failback (01 will always be the active, with the standby device waiting 60 seconds before failing back).

modify cm trust-domain add-device { ca-device true device-ip 10.99.1.5 device-name az-bip-grn02.stesworld.com username netadmin password N0c00linazure! }

create cm device-group dg-sync-failover devices add { az-bip-grn01.stesworld.com az-bip-grn02.stesworld.com } type sync-failover auto-sync enabled network-failover enabled

modify cm traffic-group traffic-group-1 ha-order { az-bip-grn01.stesworld.com az-bip-grn02.stesworld.com } auto-failback-enabled true

You can check the sync status and force an initial sync by pushing the local device's config to the remote devices in the device-group.

list /cm trust-domain

show /cm sync-status

run /cm config-sync to-group dg-sync-failover

You should now have a healthy HA pair. The majority of the future config only needs adding on one device as it will be automatically replicated between the pair.

Azure Health probe and dummy VIP: Change the default CLI text editor to be nano (rather than VIM) and create the irule to answer Azure LB health probes.

modify cli preference editor nano

create ltm rule irule_az_health_check

Once you enter the last command it takes you into the editor, add the following text and save.

when HTTP_REQUEST {

HTTP::respond 200 content "OK"

}

The Azure health check virtual server needs to be created on each device as it uses the local self-ip (not a shared object) for the VIP address. The linked irule answers the Azure LB probes on port 8888 (rather than having a pool).

create ltm virtual vip_az_health_check_8888 destination 10.99.1.4:8888 ip-protocol tcp profiles add { http } rules { irule_az_health_check } description "Azure LB health check probe"

create ltm virtual vip_az_health_check_8888 destination 10.99.1.5:8888 ip-protocol tcp profiles add { http } rules { irule_az_health_check } description "Azure LB health check probe"
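Before relying on the Azure probe it can be worth confirming that each health check virtual server actually answers. The sketch below is only an illustration: the function name check_f5_probe is made up for this post, and it assumes bash and curl on a test host that can reach the 10.99.1.0/24 data subnet. The Azure probe itself is a plain TCP check, so an HTTP request like this also exercises the irule.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of any F5 or Azure tooling): request the
# health check VIP on one F5 and report whether the irule answered with 200.
# Assumes curl is installed and the host can route to the F5 data subnet.
check_f5_probe() {
  local ip="$1" port="${2:-8888}" code
  # -m 3 caps the request at 3 seconds; curl prints 000 if no HTTP reply arrives
  code="$(curl -s -o /dev/null -m 3 -w '%{http_code}' "http://${ip}:${port}/")"
  if [ "$code" = "200" ]; then
    echo "${ip}:${port} healthy"
  else
    echo "${ip}:${port} unhealthy (HTTP ${code:-none})"
    return 1
  fi
}

# Example usage against the two lab self-IPs:
#   check_f5_probe 10.99.1.4
#   check_f5_probe 10.99.1.5
```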

Network Services: Optionally set NTP, DNS, SNMP and logging.

modify sys ntp servers add { ntp.stesworld.com }

modify sys dns name-servers add { 1.1.1.1 8.8.8.8 } search replace-all-with { stesworld.com }

modify sys sshd banner enabled banner-text "stesworld --- authorised access only" inactivity-timeout 1200

modify sys snmp communities replace-all-with { srvsnmp01 { access ro source 1.2.3.4 community-name breath } srvsnmp02 { access ro source 2.3.4.5 community-name breath } }

modify sys snmp traps replace-all-with { srvsnmp01 { host 1.2.3.4 community breath port 162 network mgmt } srvsnmp02 { host 2.3.4.5 community breath port 162 network mgmt } }

modify sys syslog remote-servers add { srvlog01 { host 3.4.5.6 remote-port 514}}

Creating a VIP

The creation of a VIP is a 2 part process:

- Azure Load Balancer: A floating-ip VIP (set in the LB rule) in essence means that traffic that comes into the VIP is forwarded onto the backend servers without changing the destination (VIP) address. For this reason all VIPs use the same backend-pool and health probe as this is not the true backend of the VIP (the actual backend servers being load-balanced); the Azure Load Balancer forwards the traffic (using a five-tuple hash as the LB algorithm) onto the healthy F5 LTMs to do the actual true backend load balancing

- F5 LTM: Operates in much the same manner as a traditional load balancer; the virtual-server matches the Azure load balancer rule's frontend-ip and port (service) and forwards traffic onto a pool which contains the actual backend servers that are to be load balanced. The SNAT pool consists of the secondary IPs from the F5's data NIC, which are used by the LTM to connect to the backend servers

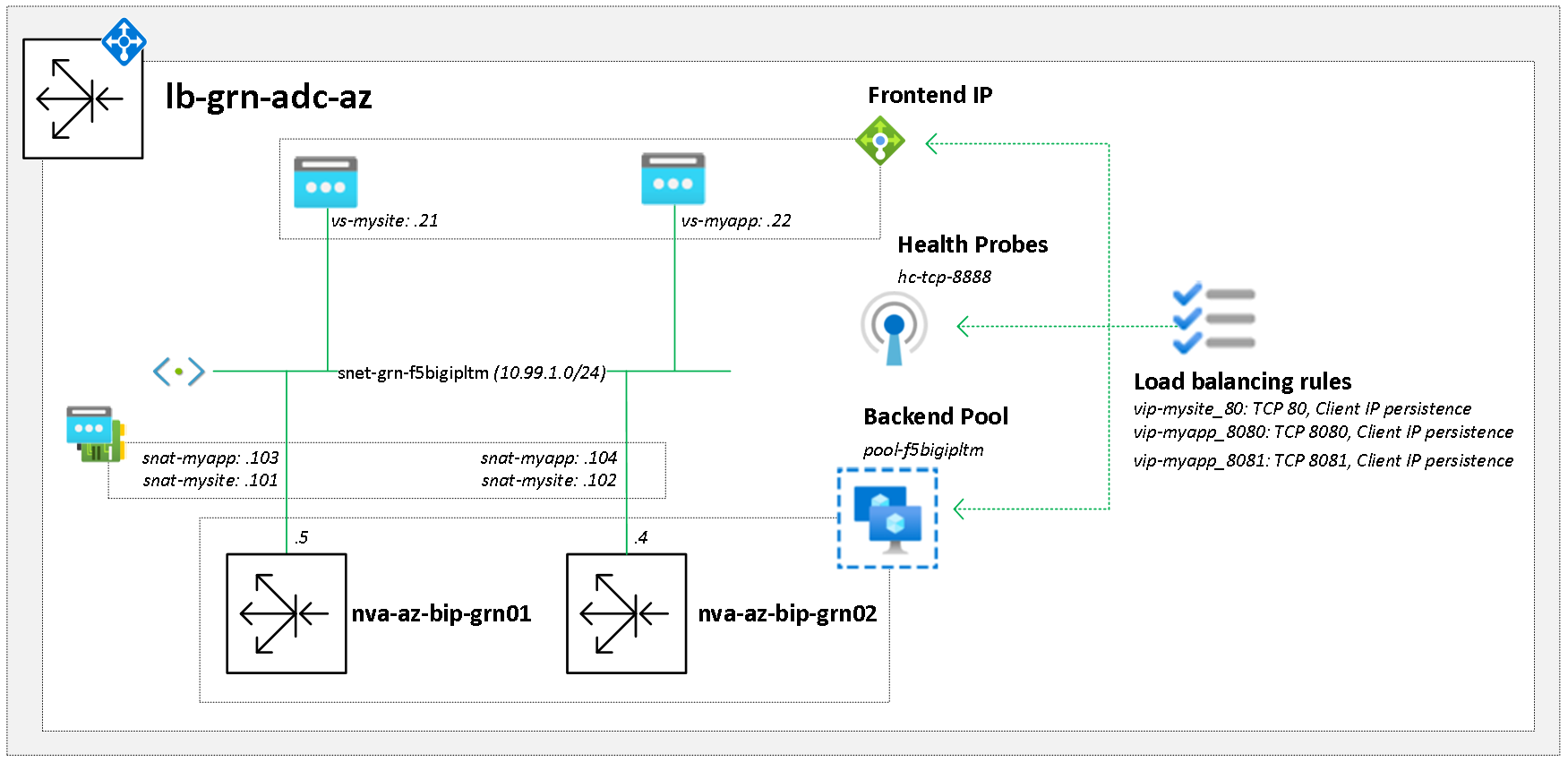

Azure load balancer configuration

Frontend IP: The VIP address, which comes out of the snet-grn-f5bigipltm subnet range (10.99.1.0/24). It is the equivalent of an LTM virtual-address.

az network lb frontend-ip create -g rg-f5bigipltm-grn-az --lb-name lb-grn-adc-az -n vs-mysite --vnet-name vnet-grn-az --subnet snet-grn-f5bigipltm --private-ip-address 10.99.1.21

az network lb frontend-ip create -g rg-f5bigipltm-grn-az --lb-name lb-grn-adc-az -n vs-myapp --vnet-name vnet-grn-az --subnet snet-grn-f5bigipltm --private-ip-address 10.99.1.22

Load Balancing Rule: Is required for every new VIP, links the frontend-ip to the service (port number). The same backend pool and health probe is used for all VIPs, it monitors the health of the actual F5 devices rather than the true backend servers.

az network lb rule create -g rg-f5bigipltm-grn-az --lb-name lb-grn-adc-az -n vip-mysite-80 --protocol tcp --frontend-port 80 --backend-port 80 --frontend-ip-name vs-mysite --backend-pool-name pool-f5bigipltm --probe-name hc-tcp-8888 --floating-ip true

az network lb rule create -g rg-f5bigipltm-grn-az --lb-name lb-grn-adc-az -n vip-myapp-8080 --protocol tcp --frontend-port 8080 --backend-port 8080 --frontend-ip-name vs-myapp --backend-pool-name pool-f5bigipltm --probe-name hc-tcp-8888 --floating-ip true

az network lb rule create -g rg-f5bigipltm-grn-az --lb-name lb-grn-adc-az -n vip-myapp-8081 --protocol tcp --frontend-port 8081 --backend-port 8081 --frontend-ip-name vs-myapp --backend-pool-name pool-f5bigipltm --probe-name hc-tcp-8888 --floating-ip true
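As every new VIP repeats the same frontend-ip and rule pattern, the commands above could be wrapped in a small function. This is just a sketch under a few assumptions: the names add_az_vip, run and DRY_RUN are invented here, the resource names mirror the lab, and the subnet is passed by name together with --vnet-name. Setting DRY_RUN=1 prints the az commands rather than running them, which is handy for checking what would be created.

```shell
#!/usr/bin/env bash
# Lab resource names taken from the steps above
RG="rg-f5bigipltm-grn-az"
LB="lb-grn-adc-az"

# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise execute it
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: add_az_vip <name> <private-ip> <port> [port...]
# Creates one frontend-ip and one floating-ip rule per port, all sharing
# the same F5 backend pool and health probe.
add_az_vip() {
  local name="$1" ip="$2" port
  shift 2
  run az network lb frontend-ip create -g "$RG" --lb-name "$LB" -n "vs-${name}" \
    --vnet-name vnet-grn-az --subnet snet-grn-f5bigipltm --private-ip-address "$ip"
  for port in "$@"; do
    run az network lb rule create -g "$RG" --lb-name "$LB" -n "vip-${name}-${port}" \
      --protocol tcp --frontend-port "$port" --backend-port "$port" \
      --frontend-ip-name "vs-${name}" --backend-pool-name pool-f5bigipltm \
      --probe-name hc-tcp-8888 --floating-ip true
  done
}

# Example usage (prints three az commands without touching Azure):
#   DRY_RUN=1 add_az_vip myapp 10.99.1.22 8080 8081
```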

SNAT address: A secondary IP address added to the NIC of each F5 (comes out of the snet-grn-f5bigipltm range) that ensures return traffic comes back to the same F5. This is optional: if there is no need to differentiate between VIP traffic on the backend (all traffic from the F5 to all VIP backend servers comes from the same F5 interface address) you can use auto-snat on the F5 LTM, which means no secondary IPs are needed.

az network nic ip-config create -g rg-f5bigipltm-grn-az -n snat-mysite --nic-name nic-az-bip-grn01-e1-1 --private-ip-address 10.99.1.101

az network nic ip-config create -g rg-f5bigipltm-grn-az -n snat-mysite --nic-name nic-az-bip-grn02-e1-1 --private-ip-address 10.99.1.103

az network nic ip-config create -g rg-f5bigipltm-grn-az -n snat-myapp --nic-name nic-az-bip-grn01-e1-1 --private-ip-address 10.99.1.102

az network nic ip-config create -g rg-f5bigipltm-grn-az -n snat-myapp --nic-name nic-az-bip-grn02-e1-1 --private-ip-address 10.99.1.104
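Because each SNAT address is tied to one specific NIC, it is easy to lose track of which IP belongs to which F5. The following is a rough sketch, assuming the lab NIC naming (nic-az-bip-grn01-e1-1, nic-az-bip-grn02-e1-1); add_snat_ips, run and DRY_RUN are hypothetical names. It adds one secondary IP per device and then lists the NIC's ip-configs so the address can be copied into the matching LTM SNAT pool.

```shell
#!/usr/bin/env bash
RG="rg-f5bigipltm-grn-az"

# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise execute it
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: add_snat_ips <name> <ip-on-grn01> <ip-on-grn02>
# The first IP lands on the grn01 data NIC, the second on the grn02 data NIC.
add_snat_ips() {
  local name="$1" n=1 ip nic
  shift
  for ip in "$@"; do
    nic="nic-az-bip-grn0${n}-e1-1"
    run az network nic ip-config create -g "$RG" -n "snat-${name}" \
      --nic-name "$nic" --private-ip-address "$ip"
    # Show every ip-config on this NIC so the device-to-address mapping is visible
    run az network nic ip-config list -g "$RG" --nic-name "$nic" -o table
    n=$((n + 1))
  done
}

# Example usage:
#   DRY_RUN=1 add_snat_ips mysite 10.99.1.101 10.99.1.103
```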

LTM load balancer configuration

As per any HA LTM the configuration only has to be added on 1 device and the HA will replicate it to all others. There are however 2 important device specific configurations required to allow for the Active/Active passing of traffic and return traffic.

SNAT address: The SNAT pool is a shared object but the membership is local as the SNAT IPs are device specific. The SNAT IP is added on each device individually and the traffic-group set to local-only to ensure that it is not replicated between the HA pair. Until the SNAT pool has a member on each device, sync will fail with the message “must reference at least one translation address”.

-az-bip-grn01

create ltm snat-translation 10.99.1.101 { address 10.99.1.101 traffic-group traffic-group-local-only }

create ltm snat-translation 10.99.1.102 { address 10.99.1.102 traffic-group traffic-group-local-only }

create ltm snatpool snat_mysite members add { 10.99.1.101 }

create ltm snatpool snat_myapp members add { 10.99.1.102 }

-az-bip-grn02

create ltm snat-translation 10.99.1.103 { address 10.99.1.103 traffic-group traffic-group-local-only }

create ltm snat-translation 10.99.1.104 { address 10.99.1.104 traffic-group traffic-group-local-only }

create ltm snatpool snat_mysite members add { 10.99.1.103 }

create ltm snatpool snat_myapp members add { 10.99.1.104 }

Backend Pool and Nodes: The true backend servers that traffic will be load balanced to; if they don't already exist the nodes will automatically be created. One pool gets a custom HTTP health probe whilst the others use the default TCP health probe.

create ltm monitor http hc_http_mysite defaults-from http interval 30 timeout 91 send "GET /healthcheck.txt HTTP/1.1\r\nHost:myhr" recv "HTTP/1.1 200 OK"

create ltm pool pool_mysite_80 load-balancing-mode least-connections-node members add { az_svr_webapp01:80 { address 10.99.2.11 } az_svr_webapp02:80 { address 10.99.2.12 }} monitor hc_http_mysite

create ltm pool pool_mysite_8080 load-balancing-mode least-connections-node members add { az_svr_webapp01:8080 { address 10.99.2.11 } az_svr_webapp02:8080 { address 10.99.2.12 }} monitor tcp

create ltm pool pool_mysite_8081 load-balancing-mode least-connections-node members add { az_svr_webapp01:8081 { address 10.99.2.11 } az_svr_webapp02:8081 { address 10.99.2.12 }} monitor tcp

VIPs: Create the VIPs by specifying the IP and service (port number) as well as linking the SNAT and backend server pools to the VIP.

create ltm virtual vip_mysite_80 destination 10.99.1.21:80 ip-protocol tcp pool pool_mysite_80 source-address-translation { pool snat_mysite type snat } description "my website"

create ltm virtual vip_mysite_8080 destination 10.99.1.22:8080 ip-protocol tcp pool pool_mysite_8080 source-address-translation { pool snat_myapp type snat } description "my app on 8080"

create ltm virtual vip_mysite_8081 destination 10.99.1.22:8081 ip-protocol tcp pool pool_mysite_8081 source-address-translation { pool snat_myapp type snat } description "my app on 8081"

Modify virtual addresses: On each device change the virtual address traffic group to none, without this only one LTM (active in LTM HA) would process traffic.

modify ltm virtual-address 10.99.1.21 traffic-group none

modify ltm virtual-address 10.99.1.22 traffic-group none
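As this change has to be made on each device, it is easy to miss one. A minimal check, run from the bash prompt of each BIG-IP (not from inside tmsh), could look like the sketch below. The function name check_vaddr_tg is hypothetical, and it assumes the tmsh list output contains the text "traffic-group none" once the change has been applied.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_vaddr_tg <virtual-address> [virtual-address...]
# Reports whether each LTM virtual address has been moved out of the
# floating traffic-group. Assumes tmsh is on the PATH (BIG-IP bash shell).
check_vaddr_tg() {
  local addr
  for addr in "$@"; do
    # Only the traffic-group property is listed for the named virtual address
    if tmsh list ltm virtual-address "$addr" traffic-group | grep -q 'traffic-group none'; then
      echo "$addr ok"
    else
      echo "$addr floating or unknown"
    fi
  done
}

# Example usage on each device:
#   check_vaddr_tg 10.99.1.21 10.99.1.22
```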

High Availability and Failover

The F5 LTM High Availability feature is solely for configuration synchronisation; it has no effect on the actual processing of traffic. Both LTM appliances will have the same configuration in terms of nodes, pool-members, pools and virtual servers, as this is the kind of information that is synced by the dg-sync-failover device-group.

By default the IP address objects (virtual-address and SNAT) are a member of the floating traffic-group, in a traditional HA setup this is how the IP addresses are moved between devices during failover (with the help of GARP). As these addresses are device specific in this Azure setup they are changed to be either traffic-group-local-only (non-floating) or traffic group none. The failover state and failover options such as force offline or force standby are irrelevant as there are no floating IP addresses.

Traffic failover is triggered by making the F5 fail the Azure load balancer health probe by disabling the Azure health check VIP (vip_az_health_check_8888) on the corresponding LTM.
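Taking an F5 out of service and putting it back could be sketched as a pair of one-liners run from the bash prompt of the LTM in question. The names drain_f5, restore_f5, run and DRY_RUN are made up for illustration. With the probe settings used earlier (interval 5, threshold 2) the Azure LB should take roughly 10 seconds to notice the change, although I have not measured this.

```shell
#!/usr/bin/env bash
# Name of the dummy health check virtual server created earlier
HC_VIP="vip_az_health_check_8888"

# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise execute it
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Disable the health check VIP so this F5 fails the Azure LB probe
drain_f5() {
  run tmsh modify ltm virtual "$HC_VIP" disabled
}

# Re-enable the health check VIP so the Azure LB sends new flows to this F5 again
restore_f5() {
  run tmsh modify ltm virtual "$HC_VIP" enabled
}

# Example usage on the device being worked on:
#   drain_f5      # before maintenance
#   restore_f5    # once finished
```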

Reference

F5 cloud deployment matrix

DevCentral Lightboard Lessons: BIG-IP Deployments in Azure Cloud

More info on HA with AZ LB and DSR

F5 BIG-IP Virtual Edition in Microsoft Azure

Deploying the BIG-IP VE in Azure - ConfigSync Cluster: 3 NIC

Microsoft Azure: F5 BIG-IP Virtual Edition Single NIC config sync

Microsoft Azure Vnet: Multi-NIC F5 BIG-IP Virtual Edition - Follow bouncing ball

Disabling single NIC setup

Info on 13 onwards behaviour where you can't disable Network Failover